Accelerating Endpoint Inferencing

Machine learning, with the correct hardware infrastructure, may soon reach endpoints.

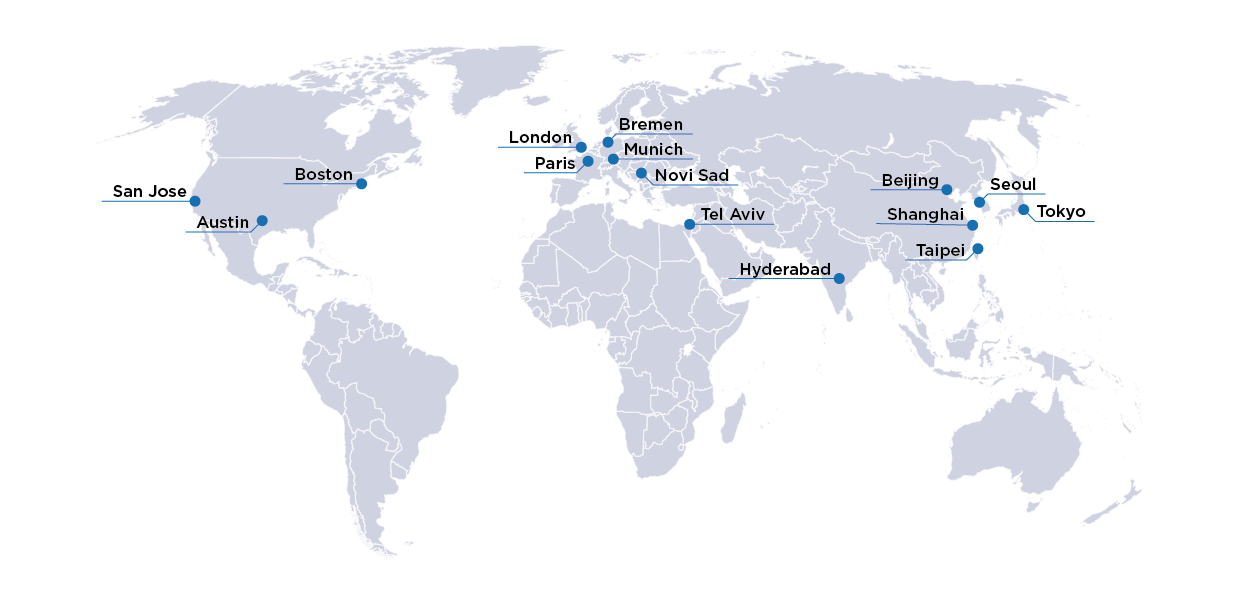

Comments by Raik Brinkmann, President and CEO of OneSpin.

Chipmakers are getting ready to debut inference chips for endpoint devices, even though the rest of the machine-learning ecosystem has yet to be established.

What infrastructure does exist today sits mostly in the cloud, on edge-computing gateways, or in companies' own data centers, which most companies continue to rely on. Tesla, for example, has its own data center. So do most major carmakers, banks, and virtually every Fortune 1000 company. And while some processes have moved into public clouds, most data is staying put for privacy reasons.

Still, something has to be done to handle the mountain of data heading their way.

[...]

...“The trend for people doing edge devices is to include multiple levels of AI. So a simple AI algorithm may detect movement, which powers up the next stage, which may switch to recognition. And if that’s interesting, then it will power up the real computation engine that does something.”
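The multi-level scheme in the quote is essentially a wake-up cascade: each cheap stage acts as a gate that decides whether to power up the next, more expensive one. The Python sketch below illustrates that control flow only. Everything in it is a hypothetical stand-in and not any vendor's design: frames are short lists of pixel intensities, `motion_detected` is a mean frame-difference test, `object_recognized` is a brightness check standing in for a small classifier, and the thresholds are arbitrary.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

# Hypothetical stand-in for sensor data: a frame is a flat list of pixel values.
Frame = Sequence[float]


@dataclass
class Stage:
    """One level of the cascade: if `trigger` fires, the next stage is woken."""
    name: str
    trigger: Callable[[Frame, Frame], bool]


def motion_detected(prev: Frame, cur: Frame, threshold: float = 10.0) -> bool:
    # Cheapest stage: mean absolute pixel difference between consecutive frames.
    diff = sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
    return diff > threshold


def object_recognized(prev: Frame, cur: Frame, threshold: float = 200.0) -> bool:
    # Placeholder "recognition" (hypothetical): fires if any pixel is very bright.
    # A real device would run a small neural network here.
    return max(cur) > threshold


@dataclass
class Cascade:
    stages: List[Stage]
    log: List[str] = field(default_factory=list)

    def process(self, prev: Frame, cur: Frame) -> int:
        """Run stages cheapest-first and stop at the first one that does not fire.

        Returns the number of stages that fired. Only when every stage fires
        does the (simulated) heavy computation engine run.
        """
        fired = 0
        for stage in self.stages:
            if not stage.trigger(prev, cur):
                self.log.append(f"{stage.name}: no trigger, staying in low power")
                return fired
            self.log.append(f"{stage.name}: triggered, waking next stage")
            fired += 1
        self.log.append("heavy engine: running full computation")
        return fired


if __name__ == "__main__":
    cascade = Cascade([
        Stage("motion", motion_detected),
        Stage("recognition", object_recognized),
    ])
    still = [50.0, 50.0, 50.0, 50.0]
    moved = [50.0, 90.0, 120.0, 60.0]
    bright = [50.0, 90.0, 250.0, 60.0]
    print(cascade.process(still, still))   # 0: no motion, nothing else wakes up
    print(cascade.process(still, moved))   # 1: motion, but nothing recognized
    print(cascade.process(still, bright))  # 2: both gates fire, heavy engine runs
```

Ordering the stages from cheapest to most expensive is the point of the design: most frames exit at the first gate, so the power-hungry engine runs only on the small fraction of input that survives every earlier check.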